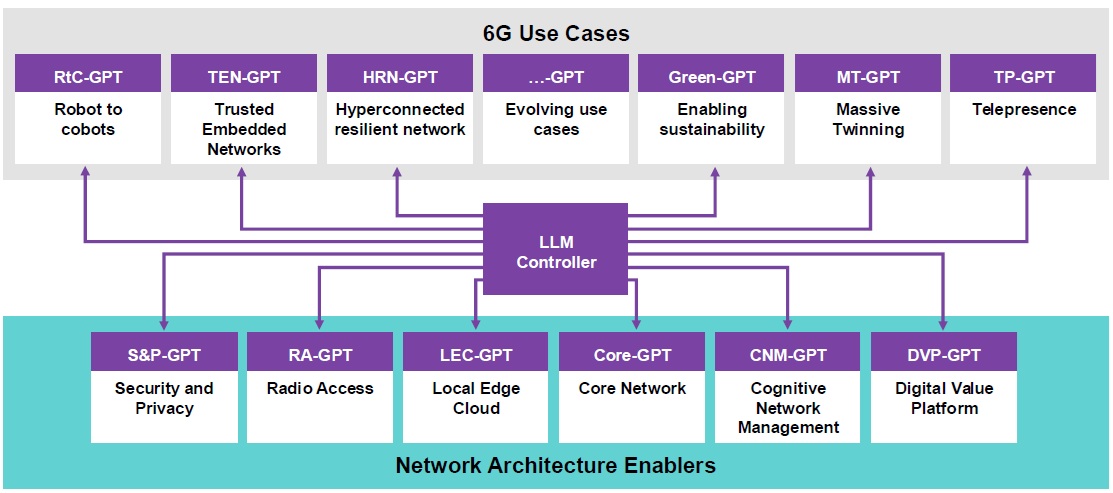

The evolution toward 6G networks marks a significant paradigm shift from static, rule-based architectures to adaptive, AI-driven networks. At the forefront of this transformation are Large Language Models (LLMs), particularly Generative Pre-trained Transformers (GPTs), which offer powerful capabilities for understanding user intent, generating action plans, and executing complex instructions. As such, LLMs are poised to become core enablers of next-generation networks and services. Recognizing this, the authors of the white paper [1] discuss an AI-native 6G architecture, one that supports the seamless integration, provisioning, updating, and creation of diverse LLMs tailored to specific network functions and applications.

At the heart of the white paper [1] is the concept of an AI-native 6G network that enables AI-centric operations across the entire network. This integrated approach promises transformative benefits such as intelligent radio and network optimization, improved efficiency and adaptability, and context-aware privacy and security.

Image from the white paper "Large Language Models in the 6G-Enabled Computing Continuum" [1]

The convergence of 6G and advanced AI, embodied in scalable, responsive LLMs, will define the future of intelligent connectivity. The time to architect and invest in AI-native networks is now.

[1] Lovén, Lauri, Miguel Bordallo López, Roberto Morabito, Jaakko Sauvola, and Sasu Tarkoma. "Large Language Models in the 6G-Enabled Computing Continuum: A White Paper." 2025.